Research Project DPI2007-66556-C03-03 (2007-2010)

Interpretacion de Escenas 3D Mediante Vision Artificial a Partir del Analisis Coordinado de Imagenes Adquiridas por un Equipo de Robots Moviles

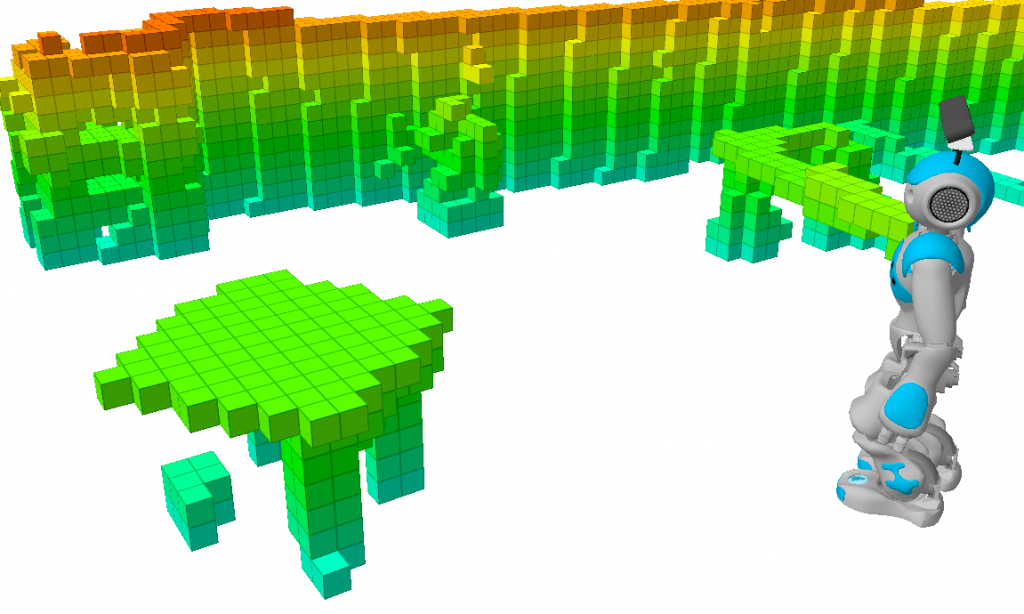

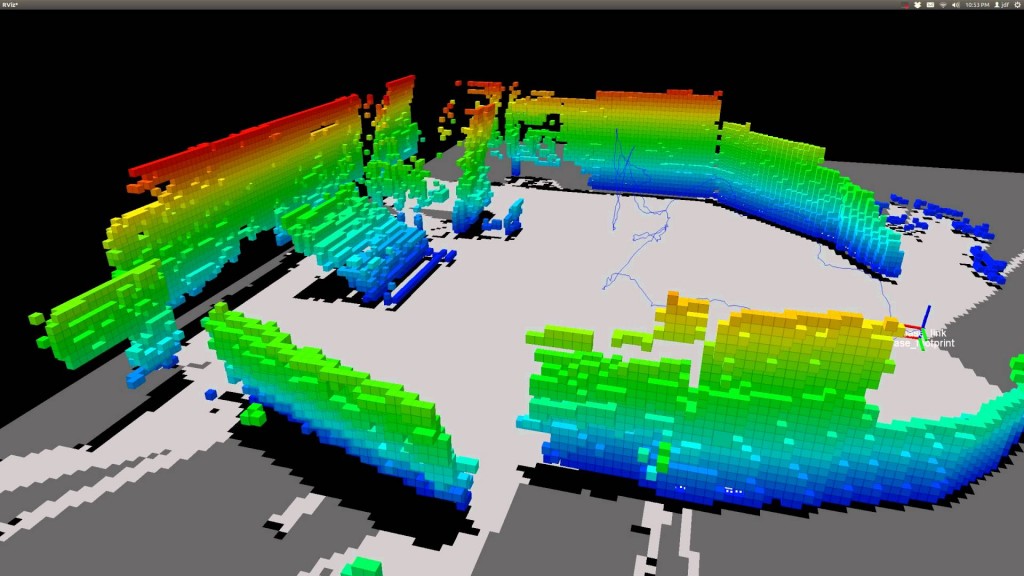

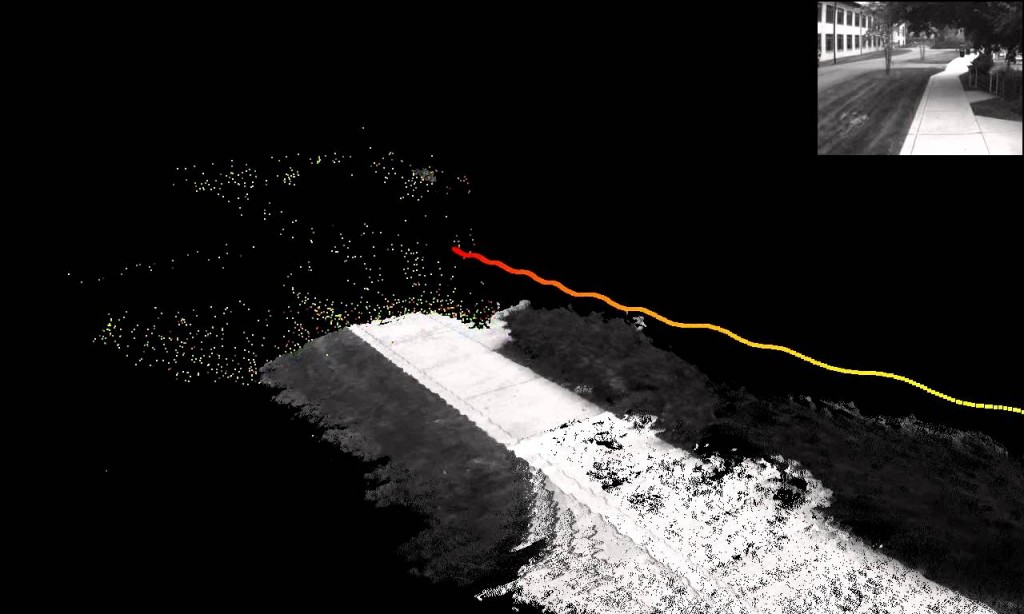

This project aims to design new computer vision techniques for the automatic interpretation of 3D scenes from images provided by a team of mobile robots. The new schemes will exploit the different images of the same region that each team member delivers during a coordinated inspection. This interpretation is expected to improve the performance of the cooperative tasks carried out by the team. As a result, these techniques should yield more accurate self-localization of the robots in space and a more accurate global 3D model of the environment.

In particular, this project has the following specific objectives:

- Computer Vision Goals: new algorithms that let robots automatically obtain a manageable description of the objects in a 3D scene and of their spatial and topological interrelations, followed by 3D scene interpretation based on that description.

- Localization Goals: simultaneous self-localization and mapping techniques with 6 degrees of freedom (SLAM 3D) over unstructured environments. The scene interpretation from the computer vision goals will be given special consideration in pursuing this objective.

- Interaction Goals: new techniques for the collaborative exploration of unknown environments by a team of mobile robots, taking into account previously proposed simulation algorithms.
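
As a rough illustration of what "6 degrees of freedom" means in the localization goal, a robot pose can be written as three translations plus three rotations. The sketch below is not the project's method; the class name `Pose6D`, the roll/pitch/yaw parametrization and the Z-Y-X rotation order are assumptions chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose6D:
    """Hypothetical 6-DOF pose: position (x, y, z) and orientation (roll, pitch, yaw) in radians."""
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float

    def rotation(self):
        """3x3 rotation matrix, assuming R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def transform_point(self, p):
        """Map a point from the robot frame to the world frame: R * p + t."""
        R = self.rotation()
        t = (self.x, self.y, self.z)
        return tuple(
            sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)
        )


# A robot at (1, 0, 0), turned 90 degrees about the vertical axis,
# observes a point 1 m straight ahead in its own frame.
pose = Pose6D(1.0, 0.0, 0.0, 0.0, 0.0, math.pi / 2)
world_point = pose.transform_point((1.0, 0.0, 0.0))
```

A planar SLAM formulation would keep only `x`, `y` and `yaw`; the remaining three parameters are what unstructured, non-flat terrain adds.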

The team of mobile robots consists of a NAO V3 robot, a Pioneer P2-AT robot, a Pioneer P3-AT robot and three Koala robots. The first is a humanoid robot. The other five are wheeled all-terrain robots. Each Koala will mount a binocular Color Bumblebee camera. The P2-AT is equipped with a trinocular Color Digiclops camera, and the P3-AT mounts a binocular Color Bumblebee-2 camera. All 3D cameras will be controlled by embedded computers. All robots will communicate with each other and with external computers through a wireless network.
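
The team composition described above can be summarized as a small inventory. This is only an illustrative sketch: the `Robot` type, the `TEAM` list, the helper `robots_with_3d_camera` and the labels "Koala 1" to "Koala 3" are hypothetical names, and the NAO's camera is left unset because the text does not name one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Robot:
    name: str
    kind: str               # "humanoid" or "wheeled"
    camera: Optional[str]   # None where the description names no 3D camera


TEAM = [
    Robot("NAO V3", "humanoid", None),
    Robot("Pioneer P2-AT", "wheeled", "Color Digiclops (trinocular)"),
    Robot("Pioneer P3-AT", "wheeled", "Color Bumblebee-2 (binocular)"),
] + [
    # Hypothetical labels for the three Koala units.
    Robot(f"Koala {i}", "wheeled", "Color Bumblebee (binocular)")
    for i in range(1, 4)
]


def robots_with_3d_camera(team):
    """Return the robots for which a 3D camera is listed."""
    return [r for r in team if r.camera is not None]


wheeled = [r for r in TEAM if r.kind == "wheeled"]
```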